Essential Guide to Choosing the Right Large Language Model(LLM) for Your Application

Navigating Large Language Models involves choosing between building, fine-tuning, or using off-the-shelf models, each balancing control, customization, and resources according to your specific needs.

Artificial Intelligence (AI) and machine learning (ML) are revolutionizing the world of technology and beyond. One of the most powerful tools in this wave of digital transformation is the Large Language Model (LLM). Large Language Models are AI models that generate human-like text. They can write essays, summarize texts, translate languages, answer questions, and even write code. The versatility of LLMs makes them suitable for a variety of applications. But with a plethora of LLMs available, how do you choose the right one for your application? This essential guide is here to help.

Key Factors for Consideration

First and foremost, it's important to clearly define the needs of your application. What tasks do you need your model to perform? Whether it's answering customer inquiries, generating content, or assisting with coding, the specific requirements of your application will play a significant role in determining which LLM is the best fit. Here's the list of factors to be considered when choosing a large language model,

Model Capability: Understand the inherent strengths and weaknesses of the model. Some models may be more proficient in certain tasks than others.

Out-of-the-box quality: How well does the model perform your task without fine-tuning?

Performance: Speed of the model's output generation can be vital, especially for real-time applications.

Cost: Includes financial expense as well as time and resources for implementation and upkeep.

Fine-tuneability / extensibility: The ability to adapt the model for specific needs or future changes is essential.

Data security: The model should comply with data privacy laws to ensure the protection of user data. How sensitive the data is to pass to LLM vendor

License permissibility: Ensure the model's usage aligns with all relevant licensing terms and conditions.

Expertise Requirement : What are the skills expertise required for implementation

Broad Choices of LLMs

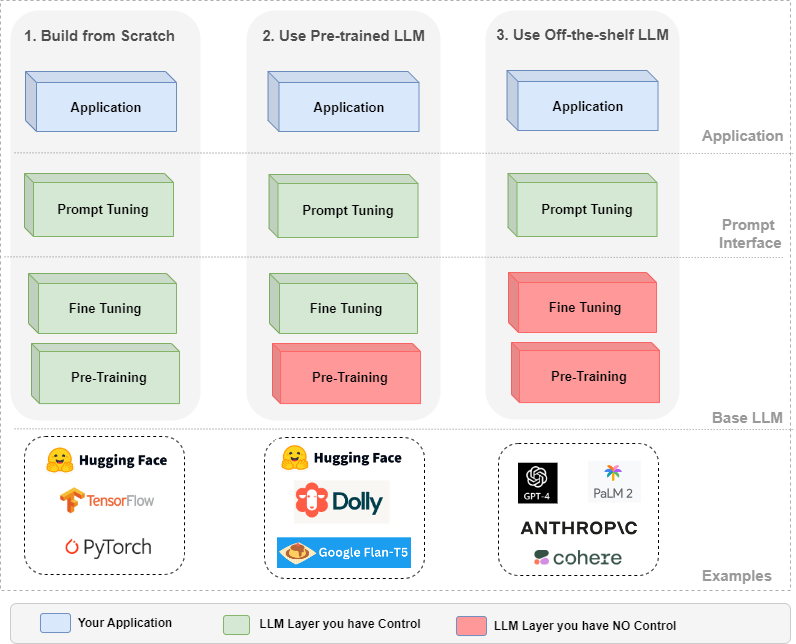

In the diverse landscape of Large Language Models, there are various options you can consider based on your specific requirements and resources. These options typically fall into three broad categories:

Building your own model from scratch

Fine-tuning a pre-trained model

Utilizing an off-the-shelf model.

Each of these paths carries its own benefits and challenges and is best suited to different scenarios. Let's dive deeper into these three options to help you make an informed decision for your application.

1. Building and training a LLM from scratch

This involves creating your own language model, training it from the ground up using a large text corpus, and then fine-tuning it to suit your specific application.

You can build and train your own Language Model using Hugging Transformer library, Tensorflow , PyTorch frameworks

Pros:

Maximum control: You control every aspect of your model, from architecture to training to tuning.

Customization: You can focus on a specific language, style, domain, or topic depending on your data.

Cons:

Resource-intensive: This requires substantial computational resources and a large amount of diverse and relevant training data.

Requires expertise: Designing, implementing, and training a LLM requires significant machine learning knowledge and experience.

2. Fine-tuning a pre-trained language model

This involves taking a pre-existing LLM, such as GPT-4, and fine-tuning it on your specific task or domain.

You can choose the pre-trained open source models like Google’s Flan-T5, Databrick’s Dolly 2.0 and then fine-tune for your use case

Pros:

Easier and less resource-intensive: The model has already been pre-trained on a large corpus, saving you time, data, and computational resources.

Still customizable: While not as flexible as building a model from scratch, fine-tuning can still adapt the model to your specific needs.

Cons:

Limited control: You're reliant on the pre-training, which may introduce biases or behaviours you don't want.

Still requires resources and expertise: Although less than building a model from scratch, fine-tuning still requires substantial computational resources and ML expertise.

3. Using an off-the-shelf pre-trained model

This involves using a pre-trained LLM as-is, without fine-tuning. Instead, you adapt the model's behaviour using techniques like prompt engineering or reinforcement learning from human feedback.

You can choose any of the off-the-shelf LLMs like OpenAI’s GPT-4, GPT-3.5, Google’s PaLM2, Anthropic, Cohere

Pros:

Minimal resources and expertise needed: This approach requires the least computational resources and ML knowledge.

Quick and easy: It's the fastest way to start using a LLM in your application.

Cons:

Least control: You have the least influence over the model's behaviour, which can make it harder to get the exact outputs you want.

Limited customization: The model might not be as effective in niche or specific domains.

Closing Thoughts

Choosing the right Large Language Model for your application can be a challenging task, but understanding your requirements and the available options is a great first step. In the exciting and rapidly evolving world of AI, the perfect model for your project is out there – it's just a matter of finding it. The best choice depends on your resources, expertise, and specific needs.

If you have limited resources or expertise starting with off-the-shelf option like GPT-4 will be the better choice to get started, understand the capability, map the use case. Then consider all factors and choose the right option for the production.