Integrating Custom Knowledge Base with LLM using In-Context Learning

Integrate Large Language Models (LLMs) with your custom knowledge base using four building blocks: In-Context Learning, an embedding model, a vector DB, and similarity search.

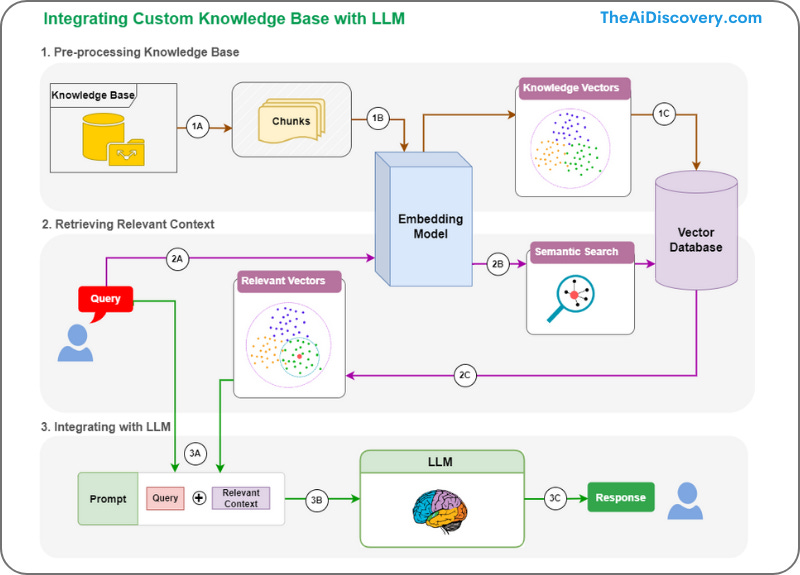

In this article, we discuss an approach to improving language model answers on domain-specific questions: integrating a custom knowledge base with a Large Language Model (LLM) using In-Context Learning. Rather than retraining the model, this approach supplies the relevant knowledge inside the prompt at query time. Let's break down this concept first.

What is In-Context Learning?

In-Context Learning is the ability of an LLM to use information supplied in its prompt (instructions, examples, or reference text) when generating a response. It does not train the model or change its weights. Instead, additional context related to the task is provided at inference time, assisting comprehension and response generation. It's especially useful for LLMs, as they can respond more accurately to a prompt when it contains related context.

Here's how you can integrate your custom knowledge base with an LLM using In-Context Learning:

Step 1: Pre-processing Knowledge Base

1A. Convert Knowledge Base to Chunks

The first step is to split the knowledge base into digestible "chunks". A chunk can be a paragraph, a sentence, or any other small unit of text that carries a meaningful piece of information. Chunks should be small enough that several of them fit into the LLM's prompt, yet large enough to make sense on their own. These chunks are the units that will later be embedded, searched, and passed to the LLM as context.
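As a rough illustration of chunking, here is a minimal sketch that packs paragraphs into chunks up to a character limit. The function name `chunk_text`, the `max_chars` parameter, and the choice to split on blank lines are assumptions made for this example; real pipelines often split by tokens or sentences and may add overlap between chunks.

```python
def chunk_text(text: str, max_chars: int = 200) -> list[str]:
    """Pack consecutive paragraphs into chunks of at most max_chars characters.

    Paragraphs are assumed to be separated by blank lines. A single paragraph
    longer than max_chars is kept whole rather than cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            # The paragraph still fits, so keep growing the current chunk.
            current = candidate
        else:
            # Close the current chunk (if any) and start a new one.
            if current:
                chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks
```

Splitting on paragraph boundaries keeps each chunk readable on its own, which matters because the chunk text is what the LLM eventually sees.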

1B. Convert Chunks to Vectors Using Embedding Model

Next, these text chunks are converted into vectors using an embedding model. Embedding models map words, sentences, or entire documents to vectors of real numbers. They are trained so that texts with similar meaning map to nearby vectors, which lets us compare chunks and queries numerically instead of by exact wording.
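To make the text-to-vector idea concrete, here is a toy sketch. It is not a real embedding model: `toy_embed` is a hypothetical stand-in that hashes each word into one of `dim` buckets and normalizes the result, so it only captures word overlap, not meaning. In practice you would call an actual embedding model here.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model (hashed bag of words).

    Each lowercase word is hashed into one of `dim` buckets, and the vector
    is scaled to unit length. Unlike a real embedding model, this captures
    only which words occur, not what they mean.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # hashlib gives a hash that is stable across runs, unlike built-in hash().
        digest = hashlib.md5(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec
```

The important property, which real models share, is that the output has a fixed length regardless of how long the input text is.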

1C. Store the Vectors and Chunk Mapping in Vector DB

Once generated, the vectors are stored in a Vector Database (DB) along with a mapping to their corresponding chunks. This allows for efficient retrieval of information later on in the process. Vector DBs are particularly effective as they are optimized for high-dimensional vector data, facilitating rapid semantic searches.
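The essential idea of storing vectors together with their chunk mapping can be sketched with a small in-memory class. `InMemoryVectorStore` and its `add` method are hypothetical names for this illustration; a real vector DB adds persistence and indexing on top of the same basic mapping.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryVectorStore:
    """Illustrative stand-in for a vector DB: ids map to vectors and to chunks."""

    vectors: dict[int, list[float]] = field(default_factory=dict)
    chunks: dict[int, str] = field(default_factory=dict)

    def add(self, vector: list[float], chunk: str) -> int:
        """Store a vector with its source chunk and return the assigned id."""
        chunk_id = len(self.vectors)  # ids are assigned sequentially from 0
        self.vectors[chunk_id] = vector
        self.chunks[chunk_id] = chunk
        return chunk_id
```

Keeping the vector and the chunk under the same id is what later lets a search result (an id) be turned back into readable text.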

Step 2: Retrieving Relevant Context

2A. Convert User Query to Vector Using Embedding Model

When a user query comes in, it's also transformed into a vector using the same embedding model. This ensures that the query and the information in our vector database are in the same semantic space, allowing for accurate matching and retrieval.
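One simple way to guarantee that chunks and queries share an embedding model is to route both through a single object. The sketch below assumes a hypothetical `SharedEmbedder` wrapper and uses `letter_count_embed`, a deliberately trivial stand-in for a real model, purely to show the wiring.

```python
from typing import Callable

Vector = list[float]


def letter_count_embed(text: str) -> Vector:
    """Hypothetical stand-in for a real embedding model: counts of letters a-z."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


class SharedEmbedder:
    """Routes both chunks and queries through one embedding function."""

    def __init__(self, embed_fn: Callable[[str], Vector]) -> None:
        self._embed_fn = embed_fn

    def embed_chunk(self, chunk: str) -> Vector:
        return self._embed_fn(chunk)

    def embed_query(self, query: str) -> Vector:
        # Same function as embed_chunk, so both land in the same vector space.
        return self._embed_fn(query)
```

If the indexing and query code paths ever used different models, the vectors would no longer be comparable, even if they happened to have the same length.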

2B. Perform Semantic Search of Query Vector in Vector DB

The user query vector is then used to search the Vector DB for the most semantically similar vectors, that is, the stored vectors that lie closest to it. The aim is to identify chunks from our knowledge base that are contextually relevant to the query.
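A common similarity measure for this search is cosine similarity. The following sketch scores every stored vector against the query and returns the best `k`. The names `cosine_similarity` and `top_k` are chosen for this example, and the exhaustive scan is an assumption made for simplicity; vector DBs typically use approximate nearest-neighbor indexes to avoid comparing against every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query_vec: list[float], vectors: dict[int, list[float]], k: int = 3
) -> list[tuple[int, float]]:
    """Return up to k (chunk_id, score) pairs, highest similarity first."""
    scored = [(cid, cosine_similarity(query_vec, vec)) for cid, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

For example, with stored vectors `{0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}` and the query `[1.0, 0.0]`, chunk 0 ranks first (identical direction) and chunk 2 second.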

2C. Retrieve Relevant Vectors and Mapped Chunks

Once the closest matching vectors are identified, the corresponding chunks of knowledge are retrieved from the database. These chunks represent the contextual knowledge that will be used to aid the LLM in generating a response to the user query.
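Turning search hits back into text is a lookup through the chunk mapping. In this sketch, `fetch_chunks` and its `min_score` cutoff are assumptions added for illustration; the cutoff is one optional way to drop weak matches so they do not dilute the prompt.

```python
def fetch_chunks(
    hits: list[tuple[int, float]],
    chunk_by_id: dict[int, str],
    min_score: float = 0.0,
) -> list[str]:
    """Map (chunk_id, score) hits to chunk text, keeping the order of the hits.

    Hits scoring below min_score, or whose id is missing from the mapping,
    are skipped.
    """
    return [
        chunk_by_id[chunk_id]
        for chunk_id, score in hits
        if score >= min_score and chunk_id in chunk_by_id
    ]
```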

Step 3: Integrating with LLM

3A. Construct Prompt with Query and Relevant Context

With the relevant chunks and the user query at hand, a new prompt is constructed. This prompt includes both the original query and the contextually relevant information from our knowledge base, thereby enriching the original query with valuable context.
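Prompt construction is mostly string formatting. Here is a minimal sketch, where the function name `build_prompt` and the exact instruction wording are assumptions for this example rather than a required template:

```python
def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Combine retrieved chunks and the user query into a single prompt."""
    # Number the chunks so the model (and a human reader) can tell them apart.
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )
```

Telling the model to rely on the supplied context, and to say when that context is insufficient, is a common way to discourage answers that are not backed by the knowledge base.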

3B. Pass the Prompt to LLM

The constructed prompt is then passed to the LLM. Equipped with both the user's query and additional context, the LLM is now in a much stronger position to generate an accurate, relevant, and useful response.
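How the prompt is sent depends on the LLM provider, so the sketch below treats the model as any function from prompt text to response text. Both `ask_llm` and `stub_llm` are hypothetical names; `stub_llm` stands in for a real model call so the example runs without network access.

```python
from typing import Callable


def ask_llm(prompt: str, llm: Callable[[str], str]) -> str:
    """Send the prompt to the given LLM callable and return its trimmed reply."""
    return llm(prompt).strip()


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; it only reports the prompt size."""
    return f"  (stub) received {len(prompt)} characters  "
```

Passing the model in as a callable keeps the rest of the pipeline independent of any one provider: swapping models means swapping the function passed as `llm`.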

3C. Get the LLM Response

Finally, the LLM generates an output based on the context-rich prompt. Because the response can draw on the retrieved chunks rather than only on what the model learned during training, it is more likely to be accurate and specific to our knowledge base.

In conclusion, integrating a custom knowledge base with Large Language Models using In-Context Learning lets an LLM answer questions about information it was never trained on, without retraining the model. It combines the strengths of a static knowledge base and a dynamic language model to provide accurate and relevant responses to a wide range of user queries.