Transforming Enterprise Search Using Large Language Models (LLMs)

LLMs are reshaping enterprise search, providing semantically precise knowledge synthesis for smarter, faster business decisions.

Navigating the expansive ocean of data within an enterprise can often feel like an endless game of hide and seek, especially when relying on traditional keyword-based search tools. Employees spend countless hours sifting through documents and databases trying to locate the information they need. This quest is riddled with challenges – from irrelevant results to information overload – leading to frustration and wasted time. However, Large Language Models (LLMs) are on the cusp of revolutionizing this process through sophisticated methods like Retrieval Augmented Generation and semantic search, transforming information retrieval into information synthesis.

Challenges in Traditional Enterprise Search

Irrelevant Results

When employees use keyword-based searches, they are often met with a barrage of results that do not align with their actual intent. Keywords are a blunt instrument – incapable of grasping the nuances of human language or the context of the query, leading to a mismatch between what is searched for and what is found.

For instance, searching for "Java" might bring up results about programming, coffee, or the Indonesian island. Without understanding user intent, search results become a wild goose chase.
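To make the "Java" problem concrete, here is a minimal sketch of literal keyword matching. The three documents and the `keyword_search` helper are made up for illustration and are not taken from any real search product.

```python
# Toy corpus (hypothetical): three documents that share the keyword "Java"
# but cover unrelated topics.
DOCS = {
    "dev-guide": "Java is a programming language used for backend services.",
    "cafe-menu": "Our Java blend is a dark roast coffee.",
    "travel": "Java is an Indonesian island east of Sumatra.",
}


def keyword_search(query, docs):
    """Return ids of documents that contain every query term (case-insensitive)."""
    terms = query.lower().split()
    return [
        doc_id
        for doc_id, text in docs.items()
        if all(term in text.lower() for term in terms)
    ]


hits = keyword_search("Java", DOCS)
print(hits)  # prints ['dev-guide', 'cafe-menu', 'travel']
```

All three documents match equally well, because the matcher sees only the string "Java" and has no notion of what the searcher actually meant.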

Information Overload

In an age where data is produced at an unprecedented rate, information overload has become a substantial challenge. Workers wade through a deluge of redundant results, many of which contribute little to no value to their actual informational needs. This not only overwhelms but also obscures the pathway to valuable knowledge.

Imagine an analyst looking for market trends on electric vehicles and getting swamped with everything from blog posts to ancient electric car patents. Too much information is as good as none when it's not the right information.

Productivity Loss

The current systems necessitate a tedious process of refining queries and consulting multiple platforms to piece together actionable insights. The time spent in this labyrinth of searches and cross-referencing could be better invested in tasks that directly contribute to enterprise productivity and innovation.

According to McKinsey's report “The Social Economy”, an employee spends an average of 9.3 hours per week searching for and gathering information.

The LLM Revolution in Enterprise Search

The landscape of enterprise search is undergoing a seismic shift, thanks to the integration of Large Language Models (LLMs). These AI-driven systems are moving businesses into a new era of information retrieval, one where search engines are no longer mere repositories of data but sophisticated advisors tuned to the nuanced needs of their users. As professionals across industries demand faster and more accurate access to a growing deluge of data, LLMs are transforming the core of enterprise search with capabilities that seemed like science fiction just a few years ago.

Harnessing Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) represents a groundbreaking approach to applying Large Language Models (LLMs) to enterprise search. It’s a methodology that combines the best of both worlds: the information retrieval capabilities of a search engine and the generative prowess of a language model.

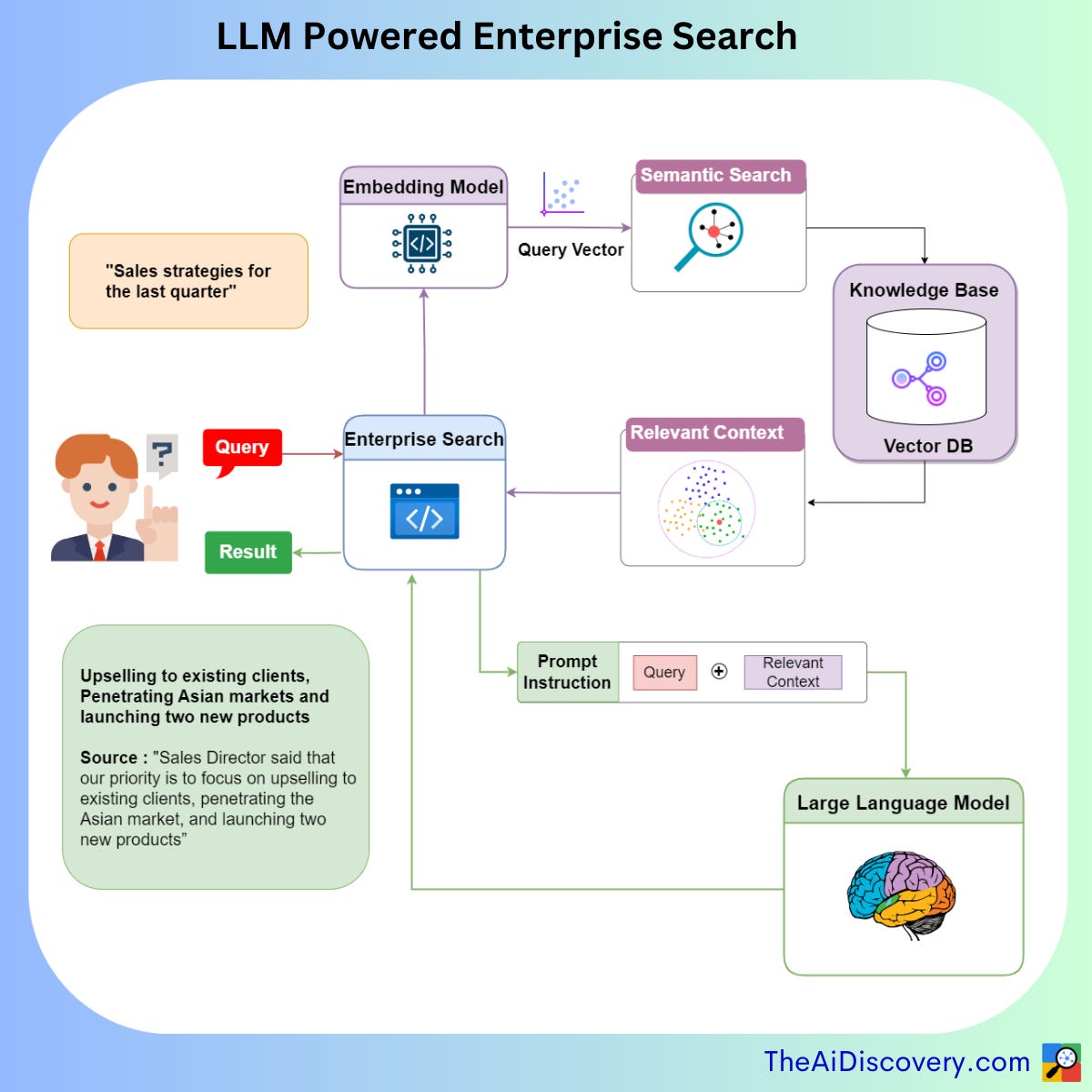

Here’s how RAG typically works:

Retrieval Augmentation Phase: Initially, the system retrieves a subset of documents from a large corpus that are likely to contain information relevant to the user's query. This is done using semantic search. The retrieved documents are then passed to the LLM as context alongside the query.

Generation Phase: Armed with this context, the LLM then generates a response that synthesizes the information from the retrieved documents. This response isn't a mere aggregation of excerpts; it's a coherent, often novel, piece of text that directly addresses the user's query. The LLM may combine data from multiple sources, fill in gaps in the information, and even make inferences where data might be incomplete.
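The two phases can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the corpus is invented, word overlap stands in for real semantic search, and `generate` is a hypothetical placeholder where a real system would call a language model.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus, k=2):
    """Retrieval phase: keep the k documents with the highest toy relevance score.

    Word overlap is an assumption made for brevity; it is NOT semantic search.
    """
    query_words = tokenize(query)
    ranked = sorted(
        corpus,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, context_docs):
    """Augmentation: place the retrieved documents in the prompt as context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def generate(prompt):
    """Generation phase: hypothetical stand-in for a call to an LLM."""
    return f"[model response to a prompt of {len(prompt)} characters]"


# Invented corpus for illustration only.
CORPUS = [
    "The travel policy allows economy flights for trips under six hours.",
    "Expense reports are due by the fifth business day of each month.",
    "The cafeteria is open from 8am to 3pm on weekdays.",
]

query = "When are expense reports due?"
top_docs = retrieve(query, CORPUS, k=1)
prompt = build_prompt(query, top_docs)
answer = generate(prompt)
print(prompt)
```

The point of the sketch is the shape of the pipeline: the model never sees the whole corpus, only the handful of documents the retrieval step selected for this query.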

RAG is explained in detail in my previous post.

Embracing Semantic Search

Semantic search is a paradigm shift: it focuses on understanding the searcher's intent and the contextual meaning of a query rather than matching literal keywords. In RAG, semantic search retrieves the relevant information and passes it as context to the LLM, which can then discern subtleties and nuances in the query and return more relevant and precise results. Together they read between the lines of vague requests and interpret language patterns to match the user's true intentions.

For example, when a sales executive searches "sales strategies for the last quarter" in an LLM-enhanced system, the query doesn't just trigger a hunt for matching keywords. The system understands the semantic meaning behind the query. It recognizes "last quarter" as a time-specific element that pertains to recent, tactical moves rather than historical data or general sales tactics.
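One common way to implement semantic search is to compare embedding vectors with cosine similarity. The sketch below assumes hand-written three-dimensional vectors (roughly "programming", "beverage", "geography") in place of a real embedding model, so every number in it is hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings; a real system would obtain these from a model.
DOC_VECTORS = {
    "Java developer guide": [0.9, 0.1, 0.0],
    "Java coffee tasting notes": [0.1, 0.9, 0.1],
    "Java island travel tips": [0.0, 0.1, 0.9],
}

# Hypothetical embedding of the query "how do I fix a null pointer exception".
# The query shares no keyword with any document title.
query_vector = [0.8, 0.0, 0.1]

ranked = sorted(
    DOC_VECTORS,
    key=lambda name: cosine(query_vector, DOC_VECTORS[name]),
    reverse=True,
)
print(ranked[0])  # prints Java developer guide
```

Even though the query never mentions "Java", the programming document ranks first because its vector points in nearly the same direction as the query's, which is the behavior keyword matching cannot provide.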

Providing Knowledge Synthesis

LLMs redefine the very fabric of knowledge retrieval by providing synthesis rather than mere retrieval. They analyze, summarize, and integrate information from various sources to present a curated and coherent answer, transforming isolated data points into actionable knowledge. This not only saves time but also elevates the quality of information that employees base their decisions on.

Continuing the sales example, the system retrieves a document in which the sales director outlined the quarter's focus: “Our priority is to focus on upselling to existing clients, penetrating the Asian market, and launching two new products.” It doesn't stop there; it also pulls in the latest market analysis reports showing the potential for upselling within the existing client base and synthesizes this with current economic reports from the Asian markets relevant to the product launch. The final output is a concise, actionable sales strategy that aligns with the company's goals.

Conclusion

The integration of LLMs into enterprise search systems is more than an upgrade – it's a transformation. By adopting LLMs, businesses can ensure that their employees are empowered with tools that understand the nuances of human language, provide concise and relevant information, and turn the tide from information overload to information mastery. The future of enterprise knowledge management is not just about storing information but making it intuitively accessible, and LLMs are leading the way in this new era of knowledge work.